1971: The Chip That Changed Everything — The Untold Story of How the Microprocessor Was Born.

October 18, 2025 · technology #review

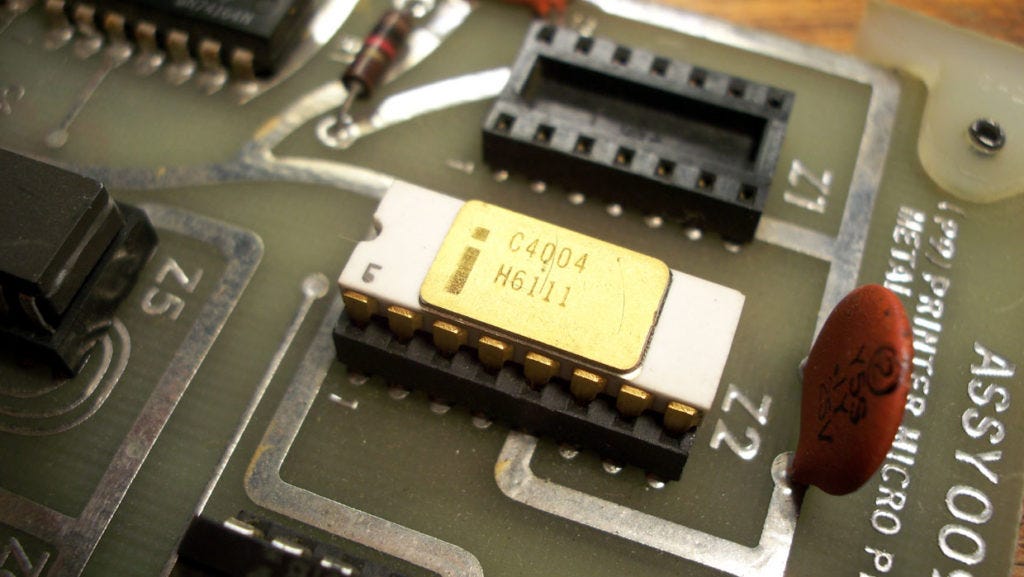

In the autumn of 1971, something small and quiet arrived that would change the shape of daily life for everyone on the planet. It was not a rocket launch or a headline-grabbing event. It was a tiny piece of silicon, smaller than a fingernail, etched with a few thousand microscopic components and sealed inside a 16-pin package. That sliver of silicon was the Intel 4004, the world’s first commercial microprocessor.

At first glance, it looked simple. But within that small chip lived an idea so powerful that it would transform how humans interact with machines, information, and one another. What the world would come to call the digital revolution had quietly begun.

The story began in 1969, when the Japanese calculator company Busicom approached Intel with a technical problem. Busicom needed a set of custom chips to power a new line of programmable calculators, and building separate circuits for every function was costly and time-consuming. That challenge sparked an idea in the mind of a young engineer named Ted Hoff: what if, instead of designing many chips for specific tasks, one chip could perform all the necessary functions simply by being told what to do through software?

It was a bold idea. At that time, most computers were large machines housed in cabinets or entire rooms, and even the newer minicomputers spread their processors across many circuit boards. The notion that a complete central processor could fit on a single chip seemed far-fetched.

Hoff developed the concept with fellow Intel engineer Stan Mazor, and Federico Faggin, who joined Intel in 1970 after pioneering silicon-gate technology at Fairchild, led the chip’s physical design. Working closely with Masatoshi Shima from Busicom, they turned the concept into reality. After months of late nights, painstaking calculations, and delicate engineering work, the team produced a working prototype. In November 1971, Intel introduced the 4004 to the world.

The 4004 was modest by modern standards: a 4-bit processor with about 2,300 transistors, capable of around 60,000 operations per second. Yet it marked a turning point. For the first time, an entire central processing unit existed on a single chip. Paired with companion memory and input/output chips, it could read instructions, perform arithmetic, store data, and control other components, all within a device small enough to balance on your fingertip.

The 4004 marked the beginning of something much larger than calculators. It showed that computing power could be mass-produced and embedded in almost anything. Within a decade, the concept evolved into the personal computer revolution. Within two decades, it powered cars, watches, and digital devices around the world. Today, billions of microprocessors run silently in everything from airplanes to refrigerators to phones.

Behind this invention was a very human story. The engineers who built the 4004 were not chasing fame. They were solving a practical problem with creativity and limited tools. Working in modest offices in California’s Santa Clara Valley, they helped shape the region that was just then being nicknamed Silicon Valley. Their teamwork and imagination turned one small project into a cornerstone of modern technology.

Intel soon recognized that this new chip had enormous potential beyond calculators. The company negotiated with Busicom to regain the rights to sell the 4004 to other manufacturers. That decision set Intel, then a young memory chip producer, on the path to becoming a global leader in computing.

The real power of the 4004 was not only in its design but in its vision. It proved that intelligence could be miniaturized, programmed, and shared. It took computing out of exclusive laboratories and made it accessible to the world.

Looking back, the late months of 1971 might seem quiet compared to the moon missions and political events of that era. Yet inside a small laboratory in California, a handful of determined engineers reshaped the future. They created the microprocessor, the heart of nearly every digital device that defines modern life. And with that single spark of silicon, humanity entered a new age where creativity, knowledge, and technology could fit in the palm of a hand.